Tim Backhaus

We are regularly testing AI models against each other to identify what will give our users the best outcome. Today we are sharing the results of our last testing, let us know in the comments if this is interesting to you!

We are regularly testing AI models against each other to identify what will give our users the best outcome. Today we are sharing the results of our last testing, let us know in the comments if this is interesting to you!

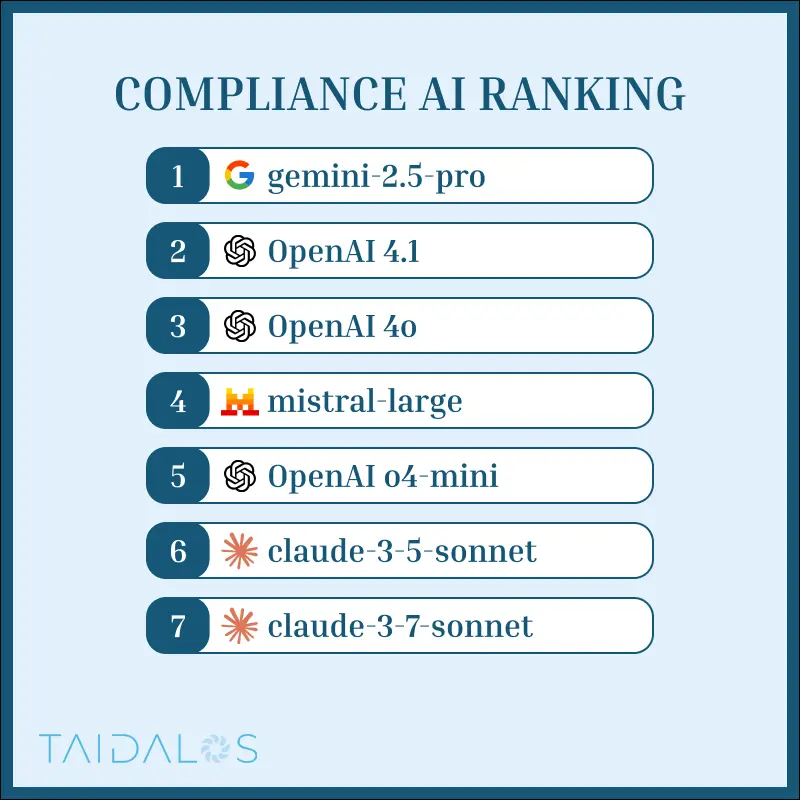

Most importantly, Google’s gemini-2.5-pro leads the list, closely followed by Open AI’s 4.1, our European player, mistral-large is also not too far behind, the anthropic models are not particularly good at compliance.

🔎 gemini-2.5-pro is very good at recall (few false negatives) and writing risk narratives short and precise.

🎯 gpt-4.1 is best at precision (few false negatives) but not as good at writing risk narratives as gemini

👍 mistral-large well-balanced model but not as good as the competitors

🙁 claude-sonnet our favorite model for programming and day-to-day AI tasks, but is not good at compliance

📈 When comparing our testing scores over time it is also crazy that we are 3.5 times better than 1 year ago with gpt-4